Tutorial 29: Using the Alpha Channel

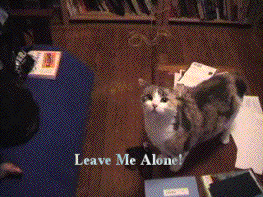

In this tutorial we'll look at how to composite two images using the alpha channel of a 4-plane char Jitter matrix as a transparency mask. We'll explore this concept as a way to superimpose subtitles generated by the jit.lcd object over a movie image.

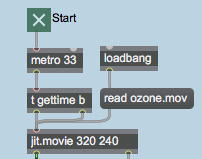

The upper-left region of the tutorial patch contains a jit.movie object that reads the file ozone.mov when the patch opens. A metro object drives the jit.movie object at a fixed interval. On each tick, a trigger object both requests a new matrix from the jit.movie object and polls its time attribute (the current playback position of the movie, in QuickTime time units).

First, we'll look at how the subtitles are being generated. Then we'll investigate how we composite them with the image from the movie using the alpha channel.

The jit.lcd object

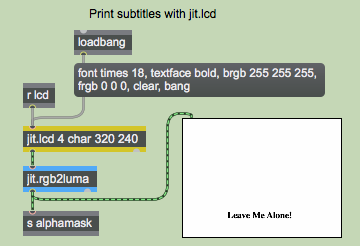

The subtitles in our patch are generated by sending messages to the jit.lcd object (at the top of the patch). The arguments to jit.lcd specify the planecount, type, and dim of the matrix generated by the object (jit.lcd only supports 4-plane char matrices). The jit.lcd object takes messages in the form of QuickDraw commands, and draws them into an output matrix when it receives a bang. We initialize our jit.lcd object by giving it commands to set its font and textface for drawing text and its foreground (frgb) and background (brgb) colors (as lists of RGB values). We then clear the jit.lcd object's internal image and send out an empty matrix with a bang.

The jit.lcd object outputs its matrix into a jit.rgb2luma object, which converts the 4-plane image output by jit.lcd into a 1-plane grayscale image. The jit.rgb2luma object generates a matrix containing the luminance value of each cell in the input matrix. This 1-plane matrix is then sent to a named send object and to a jit.pwindow object so we can view it. Note that the jit.pwindow object has its border attribute set to 1. As a result, we can see a 1-pixel black border around the white image inside.
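The conversion from a 4-plane ARGB cell to a single luminance value can be sketched in plain Python. This is only an illustration: the weights below are the common Rec. 601 coefficients, which is an assumption about what jit.rgb2luma does by default, and the names `WEIGHTS`, `argb_to_luma`, and `rgb2luma` are hypothetical.

```python
# Assumed Rec. 601 luma weights; the coefficients jit.rgb2luma
# actually uses may differ.
WEIGHTS = (0.299, 0.587, 0.114)


def argb_to_luma(cell):
    """Map one (A, R, G, B) char cell (0-255 each) to one 0-255 value."""
    _, r, g, b = cell  # the alpha plane is ignored
    value = WEIGHTS[0] * r + WEIGHTS[1] * g + WEIGHTS[2] * b
    return max(0, min(255, int(round(value))))


def rgb2luma(matrix):
    """Convert a 2-D list of ARGB cells into a 1-plane grayscale matrix."""
    return [[argb_to_luma(cell) for cell in row] for row in matrix]


# One white cell (empty canvas) and one black cell (text), as jit.lcd
# might draw them with a white background and black foreground.
frame = [[(255, 255, 255, 255), (255, 0, 0, 0)]]
mask = rgb2luma(frame)
```

With black text on a white background, the resulting mask is white everywhere except where text was drawn, which is the property the compositing stage relies on.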

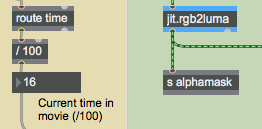

Our jit.lcd object also receives messages from elsewhere in the patch (via the named receive object attached to it). The subtitles are generated automatically by looking for certain times in the movie playback.

The jit.movie object outputs its current playback position with every tick of the metro object, thanks to the trigger object we have between the two. The time attribute is sent out the right outlet of the jit.movie object, where we can use a route object to strip it of its message selector (time). We divide the value by 100 so that we can match a specific point in the movie more reliably. Since the metro only queries the time at intervals, it's entirely possible that we'll completely skip over a specific time value. Dividing the time value by 100 coarsens it, so that a whole range of raw values maps to the number we are looking for.
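The effect of the division can be sketched in Python. The polling step, the target time, and the function name `first_match` are all hypothetical values chosen for illustration, and integer division is assumed.

```python
def first_match(target, start, step, divisor=1, limit=10_000):
    """Return the first polled time whose coarsened value matches target.

    Times are polled at start, start + step, start + 2 * step, ...
    Both the polled time and the target are integer-divided by divisor
    before comparison. Returns None if nothing matches up to limit.
    """
    t = start
    while t <= limit:
        if t // divisor == target // divisor:
            return t
        t += step
    return None


# Polling every 37 time units never lands exactly on 2100 ...
exact = first_match(2100, start=0, step=37)

# ... but after dividing by 100, any polled time from 2100 to 2199 matches.
coarse = first_match(2100, start=0, step=37, divisor=100)
```

Here `exact` comes back empty while `coarse` finds a polled time just after the target, which is the behavior the patch depends on.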

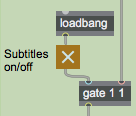

The time values are sent through a gate object where you can disable the subtitles if you so choose:

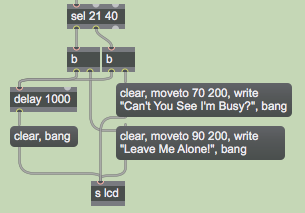

The subtitles are finally generated when particular time values make it past the gate object. The select object sends out a bang when those values arrive. This triggers commands from the message boxes to jit.lcd.

The clear message to jit.lcd erases the drawing canvas, filling all the pixels with white (our chosen background color). The moveto message moves the cursor of the jit.lcd object to a specific coordinate from which it will draw subsequent commands. The write message draws text into the matrix using the currently selected font and textface. Once we've written in our subtitles, we send the object a bang to make it output a new matrix. With every subtitle, we also send a bang to a delay object, which clears and resends the matrix after a set delay, erasing the title.

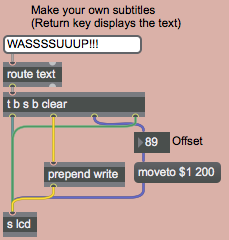

Make Your Own Titles

The region of the tutorial patch to the right (with the pink background) lets you use the textedit object to generate your own subtitles. The number box labelled Offset determines the horizontal offset for the text. The trigger object allows you to send all the necessary QuickDraw commands to the jit.lcd object in the correct order.

Now that we understand how the titles are generated, let's take a look at how they get composited over the movie.

The Alpha Channel

The alpha channel of an ARGB image defines its transparency when it is composited with a second image. If a pixel has an alpha value of 0, it is considered completely transparent when composited onto another image. If a pixel's alpha value is set to 255, it is considered completely opaque, and will show at full opacity when composited. Intermediate values will cause the pixel to fade smoothly between the first and second image. In 4-plane char Jitter matrices, data stored in plane 0 of the matrix is considered to be the alpha channel.
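For a single channel value, the fade between two images can be written as a weighted average. The sketch below assumes char data in the range 0 to 255 and uses integer arithmetic with rounding. The exact rounding used inside Jitter is not specified here, and `blend_channel` is a hypothetical name.

```python
def blend_channel(alpha, first, second):
    """Crossfade two 0-255 channel values using a 0-255 alpha.

    alpha = 255 returns the first value unchanged, alpha = 0 returns
    the second, and intermediate values mix the two proportionally.
    """
    return (alpha * first + (255 - alpha) * second + 127) // 255


opaque = blend_channel(255, 200, 100)       # first image only
transparent = blend_channel(0, 200, 100)    # second image only
halfway = blend_channel(128, 200, 100)      # roughly an even mix
```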

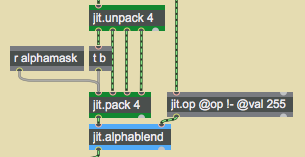

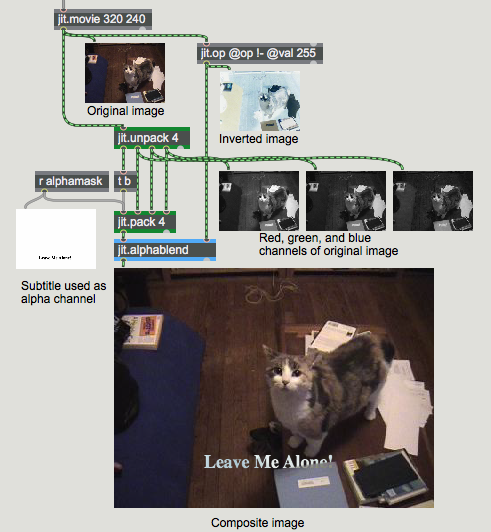

The jit.alphablend object uses the values stored in the alpha channel (plane 0) of the matrix arriving in the left inlet to perform a crossfade (on a cell-by-cell basis) between the matrices arriving in its two inlets. Our patch replaces plane 0 of the jit.movie object's output matrix with the 1-plane grayscale matrix derived from the jit.lcd object's output. We then use this new alpha channel with the jit.alphablend object to crossfade between the movie and an inverted copy of itself.

We use the jit.unpack and jit.pack objects to strip the original alpha channel from our movie. The 1-plane matrix containing the subtitle arrives at the jit.pack object from the receive object above it. Notice how the trigger object is used to force jit.pack to output new matrices even when no new matrix has arrived from the receive (jit.pack, like the Max pack object, will only output a matrix when it has received a new matrix or a bang in its leftmost inlet). The jit.op object creates a negative of the original matrix from the movie (by subtracting the matrix values from 255 using the !- operator). The jit.alphablend object then uses our new alpha channel: white values in the subtitle matrix cause the original image to be retained, while black values bring in the inverted image from the right-hand matrix.
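The whole compositing chain can be approximated in a few lines of Python operating on nested lists of (A, R, G, B) tuples. This is a rough model of the data flow only, not of how the Jitter objects are implemented; the function names and the sample cell values are hypothetical.

```python
def replace_alpha(frame, mask):
    """Swap plane 0 of each ARGB cell for the matching mask value
    (the role played by jit.unpack and jit.pack)."""
    return [[(m,) + cell[1:] for cell, m in zip(frow, mrow)]
            for frow, mrow in zip(frame, mask)]


def invert(frame):
    """Subtract every plane from 255 (the role played by jit.op)."""
    return [[tuple(255 - v for v in cell) for cell in row] for row in frame]


def alphablend(left, right):
    """Crossfade cell by cell using plane 0 of the left matrix as alpha."""
    out = []
    for lrow, rrow in zip(left, right):
        row = []
        for lcell, rcell in zip(lrow, rrow):
            a = lcell[0]
            row.append(tuple((a * l + (255 - a) * r + 127) // 255
                             for l, r in zip(lcell, rcell)))
        out.append(row)
    return out


movie = [[(255, 10, 20, 30), (255, 200, 100, 50)]]  # two sample cells
mask = [[255, 0]]              # white = no text, black = subtitle text

left = replace_alpha(movie, mask)
right = invert(movie)
result = alphablend(left, right)
```

The first cell, where the mask is white, keeps the movie's colors. The second cell, where the mask is black, takes its colors from the inverted copy.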

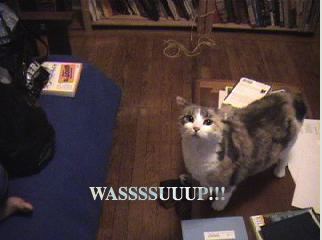

Different techniques are often used for subtitling. The technique of superimposing white text over an image (sometimes with a black border around it) is far more common than the technique used here of filling an alpha mask with an inverted image. However, doing our subtitling this way gives us a perfect excuse to use the jit.alphablend object, and may give you more legible subtitles in situations where the background image has areas of high contrast.

The image below shows the compositing process with jit.pwindow objects showing intermediate steps:

Summary

The jit.lcd object offers a complete set of QuickDraw commands to draw text and 2-dimensional graphics into a Jitter matrix. The jit.rgb2luma object converts a 4-plane ARGB matrix to a 1-plane grayscale matrix containing luminance data. You can replace the alpha channel (plane 0) of an image with a 1-plane matrix using the jit.pack object. The jit.alphablend object crossfades two images on a cell-by-cell basis based on the alpha channel of the left-hand matrix.

See Also

| Name | Description |
|---|---|
| Working with Video in Jitter | Working with Video in Jitter |
| Video and Graphics Tutorial 10: Composing the Screen | Video and Graphics 10: Composing the Screen |
| Depth Testing vs Layering | Depth Testing vs Layering |
| gate | Pass input to an outlet |
| jit.alphablend | Blend two images with an alpha channel image |
| jit.lcd | QuickDraw wrapper (deprecated) |
| jit.op | Apply binary or unary operators |
| jit.pack | Make a multiplane matrix out of single plane matrices |
| jit.pwindow | Display Jitter data and images |
| jit.movie | Play a movie |
| jit.rgb2luma | Converts RGB to monochrome (luminance) |
| jit.unpack | Make multiple single plane matrices out of a multiplane matrix |
| text | Format messages as a text file |