Scheduler and Priority

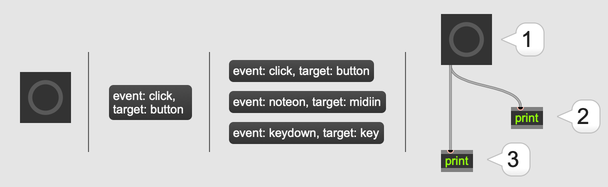

Whenever something happens in a Max patcher, it starts with an Event.

Events can be generated from different sources—for example, a MIDI keyboard, the metro object, a mouse click, a computer keyboard, etc. After an event is generated, Max either passes the event to the high-priority scheduler, or puts it onto the low-priority queue. Periodically, Max services the queue, during which it removes an event from the queue and handles it.

Handling an event usually means sending a message to an object, which might send messages to another object, until finally the event has been completely handled. The way in which these messages traverse the patcher network is depth first, meaning that a path in the network is always followed to its terminal node before messages will be sent down adjacent paths.
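The depth-first traversal described above can be sketched outside of Max as a small Python model. This is only an illustration: the `PATCHER` dictionary, the object names, and the `handle_event` function are hypothetical, and the order in which an object's connections are visited is an assumption of the model rather than a statement about how Max orders its outlets.

```python
# Hypothetical model of a patcher: each object name maps to the objects
# it sends messages to, listed in the order the model visits them.
PATCHER = {
    "button": ["a", "b"],
    "a": ["a1", "a2"],
    "b": ["b1"],
    "a1": [],
    "a2": [],
    "b1": [],
}


def handle_event(patcher, start):
    """Return the order in which objects receive the message (depth first)."""
    order = []

    def send(obj):
        order.append(obj)
        for target in patcher[obj]:
            # Follow this path to its terminal node before trying the next one.
            send(target)

    send(start)
    return order


print(handle_event(PATCHER, "button"))
# ['button', 'a', 'a1', 'a2', 'b', 'b1']
```

In this model, everything below `a` is finished before `b` receives anything, which is the behavior the paragraph above describes.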

High Priority and Low Priority

Some events have timing information associated with them, like the ones that come from a MIDI keyboard or a metro object. We call these events high priority events, since they happen at a specific time, and Max wants to prioritize handling them at that time. These are also called scheduler events, since they are handled by Max's scheduler. All other events are low priority events. Max tries to process these as fast as possible, but not at any specific time. These are also called queue events, since Max doesn't decide when to process them based on timing information, but simply by processing them in first-in-first-out order.
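As a rough illustration of the difference, the following Python sketch keeps scheduler events ordered by their target time and queue events in first-in-first-out order. The `Events` class and its method names are hypothetical and exist only for this example; they are not part of Max.

```python
import heapq
from collections import deque


class Events:
    """Toy model: a time-ordered scheduler plus a FIFO low-priority queue."""

    def __init__(self):
        self._scheduler = []   # heap of (time_ms, sequence, name)
        self._queue = deque()  # low-priority events in arrival order
        self._seq = 0          # tie-breaker for events with equal times

    def schedule(self, time_ms, name):
        """Add a high-priority event that should happen at time_ms."""
        heapq.heappush(self._scheduler, (time_ms, self._seq, name))
        self._seq += 1

    def enqueue(self, name):
        """Add a low-priority event with no timing information."""
        self._queue.append(name)

    def due(self, now_ms):
        """Pop every scheduler event whose time has arrived, earliest first."""
        ready = []
        while self._scheduler and self._scheduler[0][0] <= now_ms:
            ready.append(heapq.heappop(self._scheduler)[2])
        return ready

    def next_queued(self):
        """Pop the oldest low-priority event, or None if the queue is empty."""
        return self._queue.popleft() if self._queue else None


events = Events()
events.schedule(20, "metro tick")
events.schedule(10, "MIDI note")
events.enqueue("mouse click")
events.enqueue("key press")

print(events.due(15))        # ['MIDI note']
print(events.next_queued())  # mouse click
```

The scheduler event with the earlier time is handled first even though it was added second, while the queue events come out in the order they went in.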

Configuring the Scheduler

The two most important settings for the scheduler are Overdrive and Scheduler in Audio Interrupt. Overdrive is so important that you can toggle it directly by selecting Overdrive from the Options menu. You can also enable overdrive from the Max Preferences Window, which is also the place to enable Scheduler in Audio Interrupt.

For all other scheduler settings, including low-level, explicit control over all the details of how the scheduler operates, you can tweak the scheduler preferences.

Overdrive and parallel execution

With Overdrive enabled, Max uses two separate threads to process high priority and low priority events. This means that high priority events can be handled before a low priority event has finished executing, resulting in better timing accuracy for high priority events. Using multiple threads also has the advantage that on multi-processor machines, one processor could be executing low priority events, while a second processor could be executing high priority events.

With Overdrive disabled, Max processes both kinds of events in the same thread, neither one interrupting the other. A high priority event has to wait for a low priority event to be handled completely before the high priority event itself can be executed. This waiting results in less accurate timing for high priority events, and in some instances a long stall when waiting for very long low priority events, like loading an audio file into a buffer~ object.

By default, overdrive is disabled. This is because it adds a small amount of complexity to the way patches work, since high priority and low priority messages will pass through the patcher network simultaneously. Usually you can ignore this, but sometimes it might be important to remember that with overdrive on, the state of a patcher could change in the middle of an event being processed.

In addition, the debugging features of Max only work if overdrive is disabled.

Scheduler in Audio Interrupt

When Scheduler in Audio Interrupt (SIAI) is turned on, the high-priority scheduler runs inside the audio thread. The advancement of scheduler time is tightly coupled with the advancement of DSP time, and the scheduler is serviced once per signal vector. This can be desirable in a variety of contexts, but it is important to note a few things.

First, if using SIAI, you will want to watch out for expensive calculations in the scheduler, or else it is possible that the audio thread will not keep up with its real-time demands and hence drop vectors. This will cause large clicks and glitches in the audio output. To minimize this problem, you may want to turn down poll throttle to limit the number of events that are serviced per scheduler servicing, increase the I/O vector size to build in more buffer time for varying performance per signal vector, and/or revise your patches so that you are guaranteed no such expensive calculations in the scheduler.

Second, with SIAI, the scheduler will be extremely accurate with respect to the MSP audio signal. However, due to the way audio signal vectors are calculated, the scheduler might be less accurate with respect to actual time. For example, if the audio calculation is not very expensive, there may be clumping towards the beginning of one I/O vector worth of samples. If timing with respect to both DSP time and actual time is a primary concern, a decreased I/O vector size can help improve things, but as mentioned above, might lead to more glitches if your scheduler calculations are expensive. Another trick to synchronize with actual time is to use an object like the date object to match events with the system time as reported by the OS.

Third, if using SIAI, the scheduler and audio processing share the same thread, and therefore may not be as good at exploiting multi-processor resources.
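Because the scheduler is serviced once per signal vector under SIAI, the length of one vector gives a feel for the timing granularity involved. The short Python calculation below is a sketch only: the function name is hypothetical, and the 44100 Hz sampling rate and the vector sizes of 64 and 512 samples are example values chosen for illustration, not defaults taken from this page.

```python
def vector_duration_ms(vector_size, sample_rate_hz):
    """Time covered by one vector of samples, in milliseconds."""
    return 1000.0 * vector_size / sample_rate_hz


# Example values only (assumed, not Max defaults).
for size in (64, 512):
    print(size, round(vector_duration_ms(size, 44100), 2))
# 64 1.45
# 512 11.61
```

A smaller vector shortens the interval between scheduler servicings, at the cost of leaving less time to finish each vector's work.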

Best practices

When Overdrive is enabled, Max gives priority to timing and MIDI processing over screen drawing and user interface tasks such as responding to mouse clicks. If you are primarily going to be using Max for MIDI or audio processing, Overdrive should be enabled. If you are primarily going to be using Jitter, Overdrive should be disabled.

As for SIAI, remember that with SIAI enabled, the scheduler uses the audio sample counter, rather than "real-world time", to schedule events. If your patcher is generating events that affect your audio processing, and the sync between audio and events is important, you should enable SIAI. If it's more important that your scheduler syncs with the outside world, including synchronization with external hardware, then disable SIAI.

If you need a balance of both, use SIAI with as small an I/O vector size as you can afford to without dropouts. Just be aware that this is more demanding on your computer, and you might have to change your settings if you hear audio dropouts.

Advanced settings

For all other scheduler configuration, see the scheduler section in Max's preferences. This section discusses these settings in detail.

- Event Interval sets the interval, in milliseconds, at which low-priority (main thread) queue events are throttled. Historically, Max would poll for events at the event interval. Recent versions of Max avoid polling, for increased efficiency, but incoming events are throttled at the event interval. The default value is 2 ms.

- Poll Throttle sets the number of high-priority events processed by the scheduler at one time. High-priority events include MIDI as well as events generated by metro and other timing objects. A lower setting (e.g., 1) means less event clumping, while a higher value (e.g., 100) will result in less of an event backlog. The default value is 20 events.

- Queue Throttle sets the number of events processed per servicing of the low priority event queue. Low priority events include user interface events, graphics operations, reading files from disk, and other expensive operations that would otherwise cause timing problems for the scheduler. A lower setting (e.g., 1) means less event clumping, while a higher value (e.g., 100) will result in less of an event backlog. The default value is 10 events.

- Redraw Queue Throttle sets the maximum number of patcher UI update events to process at a time. Lower values can lead to more processing power being made available to other low-priority Max processes, and higher values make user interfaces more responsive (especially for patches in which large numbers of bpatcher objects are being used). The default value is 1000 events.

- Refresh Rate sets the minimum time, in milliseconds, between updating of the interface. A lower setting (e.g., 5) means that Max will devote more time to redrawing the screen and less to responding to user input, while a higher value (e.g., 60) will mean that the interface is faster and more responsive. The default value is 33.3 ms.

- Scheduler slop is the amount of time, in milliseconds, the scheduler is permitted to fall behind actual time before correcting the scheduler time to actual time. The scheduler will fall behind actual time if there are more high priority events than the computer can process. Typically some amount of slop is desired so that high priority events will maintain long term temporal accuracy despite small temporal deviations. A lower setting (e.g., 1) results in greater short term accuracy, while a higher value (e.g., 100) will mean that the scheduler is more accurate in the long term. The default value is 25 milliseconds.
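The trade-off behind the throttle settings above can be sketched in Python. This is a simplified model under stated assumptions: `service` and `servicings_needed` are hypothetical names, and the model assumes that no new events arrive while the backlog is being cleared.

```python
from collections import deque


def service(queue, throttle):
    """Handle at most `throttle` events in one servicing; return what was handled."""
    handled = []
    while queue and len(handled) < throttle:
        handled.append(queue.popleft())
    return handled


def servicings_needed(n_events, throttle):
    """Count how many servicings it takes to clear a backlog of n_events."""
    queue = deque(range(n_events))
    count = 0
    while queue:
        service(queue, throttle)
        count += 1
    return count


print(servicings_needed(100, 1))    # 100
print(servicings_needed(100, 10))   # 10
print(servicings_needed(100, 100))  # 1
```

With a throttle of 1, events are handled one per servicing, so they are spread out but a backlog takes a long time to clear. With a throttle of 100, the backlog clears in a single servicing, but all of the events are handled in one clump.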

Infinite Loops and Stack Overflow

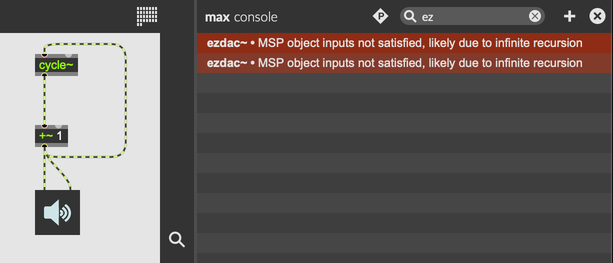

If you try to connect DSP objects in a loop, Max will post an error message to the console, and audio processing will be disabled.

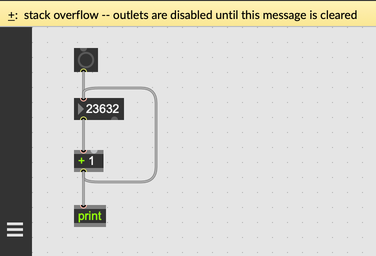

However, Max has no problem letting you make regular message connections that form a loop. In fact, this can be a useful way to process data recursively, or to implement an iterative algorithm. But if sending a message from one object to the next causes messages to be sent forever in an infinite loop, this will cause a stack overflow.

The stack overflow results since a network containing feedback has infinite depth, and the computer runs out of memory attempting to handle the event. When Max encounters a stack overflow, it will disable all outputs until you clear the message at the top of the patcher window. This will give you a chance to look at the patcher and figure out where the stack overflow occurred.

You can break an infinite loop into chunks by scheduling a new event, either using a high-priority timing object like pipe or delay, or using the low-priority deferlow object.
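The same idea can be shown by analogy in Python, where a directly wired feedback loop behaves like nested function calls and a scheduled loop behaves like a queue of separate events. This is a sketch, not a description of Max internals: both function names are hypothetical, and Python's recursion limit merely stands in for a stack overflow.

```python
from collections import deque


def count_direct(n, limit):
    """Feedback wired directly: each pass nests inside the previous one."""
    if n >= limit:
        return n
    return count_direct(n + 1, limit)


def count_deferred(limit):
    """Same loop, but each pass is queued as a new event."""
    queue = deque([0])
    result = None
    while queue:
        n = queue.popleft()      # handle one event; the stack unwinds afterwards
        if n >= limit:
            result = n
        else:
            queue.append(n + 1)  # schedule the next pass instead of calling it
    return result


try:
    count_direct(0, 100_000)
except RecursionError:
    print("direct loop: stack overflow")

print("deferred loop:", count_deferred(100_000))
```

The deferred version runs the same number of passes, but each pass finishes before the next begins, so the depth of the call stack never grows.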

Starting and Stopping the Scheduler

Press ⌘-. (Command-period, macOS) or Ctrl-. (Control-period, Windows) to stop the scheduler. You can also select Stop Scheduler from the Edit menu. With the scheduler stopped, timing objects like metro, pipe, and delay will no longer function. MIDI input and output objects like midiin and midiout will stop working too.

To start the scheduler again, press ⌘-R (macOS) or Ctrl-R (Windows), or select Resume Scheduler from the Edit menu.

Stopping the scheduler is useful if you want to pause a patcher that's doing a lot of automated execution. You might have a bunch of metro objects, each on a one millisecond delay, each causing a bunch of processing to happen. By stopping the scheduler, you can temporarily disable everything, giving yourself a chance to make edits to your patcher.

Changing Priority

Certain objects will always execute messages at low priority, even when they receive a message during a high-priority event. For example, if a MIDI message causes a buffer~ to load a new audio file, buffer~ will still load that file at low priority. Messages that cause drawing, read or write to a file, or launch a dialog box are typical examples of things which are not desirable at high priority.

You can use the defer and deferlow objects to do this same kind of deferral explicitly, generating a new event that will be handled from the low-priority queue. The defer object will put the new event at the front of the low-priority queue, while the deferlow object will put it at the back. This is an important difference, since it means that defer can reverse the order of a message sequence, while deferlow will preserve order.

The defer object will not defer event execution if executed from the low-priority queue, while the deferlow object will always put a new event at the back of the low-priority queue, even when handling a low-priority event. If it receives a message from a low-priority event, the defer object will simply pass that message through, without putting a new event on the queue.
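The ordering difference between the two objects follows directly from where each one inserts into the queue. The Python sketch below models the case where three messages arrive from a high-priority event before the queue is serviced. The functions are hypothetical stand-ins named after the Max objects, not their implementation, and the model ignores the pass-through behavior of defer at low priority.

```python
from collections import deque


def defer(queue, message):
    """Model of defer: put the new event at the front of the queue."""
    queue.appendleft(message)


def deferlow(queue, message):
    """Model of deferlow: put the new event at the back of the queue."""
    queue.append(message)


def drain(queue):
    """Service the queue until it is empty, returning the handling order."""
    handled = []
    while queue:
        handled.append(queue.popleft())
    return handled


front = deque()
back = deque()
for message in (1, 2, 3):
    defer(front, message)
    deferlow(back, message)

print(drain(front))  # [3, 2, 1]
print(drain(back))   # [1, 2, 3]
```

Each message placed at the front lands ahead of the ones deferred before it, which is why the sequence comes out reversed.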

To move an event from the low-priority queue to the high-priority scheduler, use the delay or pipe objects to schedule a new, high-priority event. You might want to do this in order to force certain computations to happen at high priority, or to schedule an event for a specific time.

Event Backlog and Data Rate Reduction

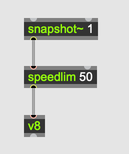

With very rapid data streams, such as a high frequency metro, or the output of a snapshot~ object with a rapid interval like 1 millisecond, it is easy to generate more events than can be processed in real time. The high priority scheduler or low priority queue can fill up with events faster than Max can process them. Max will struggle to process new events and, eventually, the whole application will crash.

One common cause of event backlog is connecting an object that generates high-priority events to one that always executes at low priority. This could occur when connecting a snapshot~ object to a v8 object. Since v8 always executes at low priority, this will push an event onto the low priority queue every time that snapshot~ generates an event.

The speedlim, qlim, and onebang objects are useful for performing data rate reduction on these rapid streams to keep up with real time. In the above case, you could use speedlim to limit the rate at which messages get sent from snapshot~ to v8.
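To illustrate the general idea of data rate reduction, the Python sketch below thins a stream of timestamped values so that passed values are at least a minimum interval apart. It is a deliberately simplified model: `limit_rate` is a hypothetical function that just drops values arriving too soon, and it does not claim to reproduce the exact behavior of speedlim, qlim, or onebang.

```python
def limit_rate(events, min_interval_ms):
    """Keep only events at least min_interval_ms after the last one passed.

    events is a list of (time_ms, value) pairs in increasing time order.
    """
    passed = []
    last_time = None
    for time_ms, value in events:
        if last_time is None or time_ms - last_time >= min_interval_ms:
            passed.append((time_ms, value))
            last_time = time_ms
        # Otherwise the event arrives too soon and is dropped.
    return passed


# One value per millisecond for 100 ms, thinned to one every 20 ms.
stream = [(t, t * 0.01) for t in range(100)]
thinned = limit_rate(stream, 20)
print(len(stream), "->", len(thinned))
print([t for t, _ in thinned])
# 100 -> 5
# [0, 20, 40, 60, 80]
```

In this model, only 5 of every 100 events reach the downstream object, which leaves the low priority queue far less to process.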

There's nothing inherently wrong with connecting an object like snapshot~ to an object like v8. Rather, you should simply be aware of what's going on behind the scenes, so you know how to modify your patcher if it isn't behaving the way you want.