How MSP Works: Max Patches and the MSP Signal Network

Introduction

Max objects communicate by sending each other messages through patch cords. These messages are sent at a specific moment, either in response to an action taken by the user (a mouse click, a MIDI note played, etc.) or because the event was scheduled to occur (by metro, delay, etc.).

MSP objects are connected by patch cords in a similar manner, but their inter-communication is conceptually different. Rather than establishing a path for messages to be sent, MSP connections establish a relationship between the connected objects, and that relationship is used to calculate the audio information necessary at any particular instant. This configuration of MSP objects is known as the signal network.

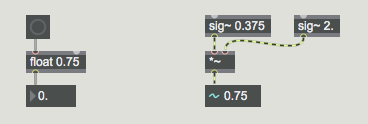

The following example illustrates the distinction between a Max patch in which messages are sent versus a signal network in which an ongoing relationship is established.

In the Max example on the left, the number box doesn't know about the number 0.75 stored in the float object. When the user clicks on the button, the float object sends out its stored value. Only then does the number box receive, display, and send out the number 0.75. In the MSP example on the right, however, each outlet that is connected as part of the signal network is constantly contributing its current value to the equation. So, even without any specific Max message being sent, the *~ object is receiving the output from the two sig~ objects, and any object connected to the outlet of *~ would be receiving the product 0.75.
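The same distinction can be sketched outside Max. The following Python is only an illustration, not how Max or MSP is implemented: `FloatBox` and `multiply_signals` are hypothetical stand-ins for the float object and for *~, and the input values 1.5 and 0.5 are assumed here simply because their product is 0.75 (the figure's actual sig~ values are not stated in the text).

```python
class FloatBox:
    """Hypothetical stand-in for Max's float object: it stores a value
    and passes it on only when it receives a bang."""

    def __init__(self, value, on_output):
        self.value = value
        self.on_output = on_output

    def bang(self):
        self.on_output(self.value)


def multiply_signals(left, right):
    """Hypothetical stand-in for *~: every sample of one signal is
    multiplied by the corresponding sample of the other."""
    return [a * b for a, b in zip(left, right)]


# Message side: nothing arrives until the user triggers the float object.
received = []
box = FloatBox(0.75, received.append)
received_before_bang = list(received)  # still empty
box.bang()                             # now the value is delivered once

# Signal side: a value exists for every sample, with no message involved.
# The constants 1.5 and 0.5 are assumed values standing in for two sig~ objects.
product = multiply_signals([1.5] * 4, [0.5] * 4)
```

On the message side a value exists downstream only after the bang; on the signal side the product is defined for every sample.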

Another way to think of an MSP signal network is as a portion of a patch that runs at a faster (audio) rate than Max. Max, and you the user, can only directly affect that signal portion of the patch every millisecond. What happens in between those millisecond intervals is calculated and performed by MSP. If you think of a signal network in this way, as a very fast patch, then it still makes sense to think of MSP objects as ‘sending’ and ‘receiving’ messages (even though those messages are sent faster than Max can see them), so we will continue to use standard Max terminology such as send, receive, input, and output for MSP objects.

Audio rate and control rate

The basic unit of time for scheduling events in Max is the millisecond (0.001 seconds). This rate -- 1000 times per second -- is generally fast enough for any sort of control one might want to exert over external devices such as synthesizers, or over visual effects such as QuickTime movies.

Digital audio, however, must be processed at a much faster rate -- commonly 44,100 times per second per channel of audio. The way MSP handles this is to calculate, on an ongoing basis, all the numbers that will be needed to produce the next few milliseconds of audio. These calculations are made by each object, based on the configuration of the signal network.

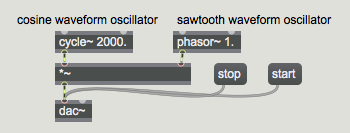

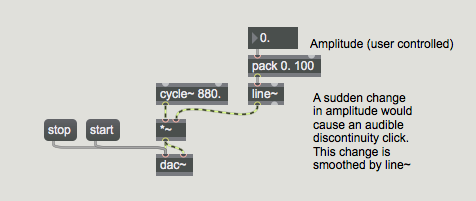

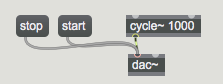

In this example, a cosine waveform oscillator with a frequency of 2000 Hz (the cycle~ object) has its amplitude scaled (every sample is multiplied by some number in the *~ object) then sent to the digital-to-analog converter (dac~). Over the course of each second, the (sub-audio) sawtooth wave output of the phasor~ object sends a continuous ramp of increasing values from 0 to 1. Those increasing numbers will be used as the right operand in the *~ for each sample of the audio waveform, and the result will be that the 2000 Hz tone will fade in linearly from silence to full amplitude each second. For each millisecond of audio, MSP must produce about 44 sample values (assuming an audio sample rate of 44,100 Hz), so for each sample it must look up the proper value in each oscillator and multiply those two values to produce the output sample.
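As a rough sketch of the per-sample arithmetic described above, the Python below computes one second of this patch's output. It is an approximation under stated assumptions: `fade_in_tone` is a hypothetical name, the cosine is computed directly with `math.cos` rather than looked up in a table as cycle~ does, and the ramp is modelled as time modulo one second.

```python
import math

SAMPLE_RATE = 44100  # samples per second, the rate assumed in the text


def fade_in_tone(num_samples, tone_hz=2000.0, ramp_hz=1.0,
                 sample_rate=SAMPLE_RATE):
    """Return num_samples of a cosine tone scaled by a repeating 0-to-1 ramp."""
    out = []
    for n in range(num_samples):
        t = n / sample_rate                              # time of this sample in seconds
        carrier = math.cos(2.0 * math.pi * tone_hz * t)  # stands in for cycle~
        ramp = (ramp_hz * t) % 1.0                       # stands in for phasor~
        out.append(carrier * ramp)                       # stands in for *~
    return out


samples_per_ms = SAMPLE_RATE / 1000      # 44.1 samples for each millisecond
one_second = fade_in_tone(SAMPLE_RATE)   # 44,100 output samples
```

Every output sample costs one oscillator value, one ramp value, and one multiplication, which is the work MSP has to repeat about 44 times per millisecond.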

Even though many MSP objects accept input values expressed in milliseconds, they calculate samples at an audio sampling rate. Max messages travel much more slowly, at what is often referred to as a control rate. It is perhaps useful to think of there being effectively two different rates of activity: the slower control rate of Max's scheduler, and the faster audio sample rate.

Note: Since you can specify time in Max in floating-point milliseconds, the resolution of the scheduler varies depending on how often it runs. The exact control rate is set by a number of MSP settings we'll introduce shortly. However, it is far less efficient to ‘process’ audio using the ‘control’ functions running in the scheduler than it is to use the specialized audio objects in MSP.

The link between Max and MSP

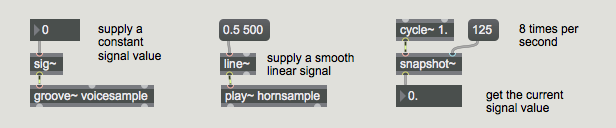

Some MSP objects exist specifically to provide a link between Max and MSP -- and to translate between the control rate and the audio rate. These objects (such as sig~ and line~) take Max messages in their inlets, but their outlets connect to the signal network; or conversely, some objects (such as snapshot~) connect to the signal network and can peek (but only as frequently as once per millisecond) at the value(s) present at a particular point in the signal network.
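The translation between the two rates can be pictured with a small Python sketch. It is a simplified model, not MSP's implementation: `hold_control_values` and `peek_every_ms` are hypothetical names loosely standing in for sig~ and snapshot~, and the sketch assumes a whole number of samples (44) per millisecond, whereas the exact figure at 44,100 Hz is 44.1.

```python
SAMPLES_PER_MS = 44  # assumed round figure; "about 44" per millisecond at 44,100 Hz


def hold_control_values(control_values):
    """Control rate to audio rate: repeat each once-per-millisecond value
    for every sample in that millisecond (loosely, what sig~ provides)."""
    signal = []
    for value in control_values:
        signal.extend([value] * SAMPLES_PER_MS)
    return signal


def peek_every_ms(signal):
    """Audio rate to control rate: report only one sample per millisecond
    (loosely, what snapshot~ provides at its fastest)."""
    return signal[::SAMPLES_PER_MS]


signal = hold_control_values([0.0, 0.5, 0.75])  # 3 control values become 132 samples
peeked = peek_every_ms(signal)                  # 132 samples become 3 control values
```

Going from control rate to audio rate means supplying a value for every sample; going the other way means discarding all but an occasional sample.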

These objects are very important because they give Max, and you the user, direct control over what goes on in the signal network.

Some MSP object inlets accept both signal input and Max messages. They can be connected as part of a signal network, and they can also receive instructions or modifications via Max messages.

For example, the dac~ (digital-to-analog converter) object, for playing the audio signal, can be turned on and off with the Max messages start and stop.

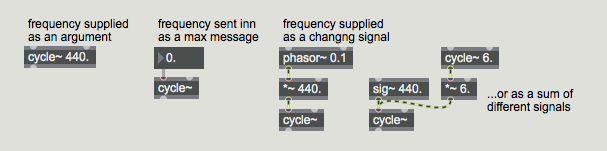

And the cycle~ (oscillator) object can receive its frequency as a Max float or int message, or it can receive its frequency from another MSP object (although it can't do both at the same time, because the audio input can be thought of as constantly supplying values that would immediately override the effect of the float or int message).

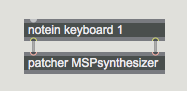

So you see that a Max patch (or subpatch) may contain both Max objects and MSP objects. For clear organization, it is frequently useful to encapsulate an entire process, such as a signal network, in a subpatch so that it can appear as a single object in another Max patch.

Limitations of MSP

From the preceding discussion, it's apparent that digital audio processing requires a lot of ‘number crunching’. The computer must produce tens of thousands of sample values per second per channel of sound, and each sample may require many arithmetic calculations, depending on the complexity of the signal network. And in order to produce realtime audio, the samples must be calculated at least as fast as they are being played.
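To put rough numbers on this, the Python sketch below works out how many samples are needed per second and how much time is available for each one. The function names are hypothetical and the stereo default is an assumption made for illustration; only the 44,100 Hz figure comes from the text.

```python
def samples_per_second(sample_rate=44100, channels=2):
    """Total sample values needed per second across all channels."""
    return sample_rate * channels


def microseconds_per_sample(sample_rate=44100, channels=2):
    """Time available to compute a single sample value in real time."""
    return 1_000_000 / samples_per_second(sample_rate, channels)


def keeps_up(compute_seconds, audio_seconds):
    """Realtime condition from the text: the samples must be calculated
    at least as fast as they are being played."""
    return compute_seconds <= audio_seconds


stereo_load = samples_per_second()               # 88,200 values per second
mono_budget = microseconds_per_sample(44100, 1)  # about 22.7 microseconds each
```

All of the arithmetic in the signal network for one sample has to fit inside that per-sample budget, which shrinks as channels are added.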

Realtime sound synthesis of this complexity on a general-purpose personal computer was pretty much out of the question until the introduction of sufficiently fast processors such as the PowerPC. Even though contemporary computers are well past the PowerPC in speed and capacity, this type of number crunching requires a great deal of the processor's attention. So it's important to be aware that there are limitations to how much your computer can do with MSP. (There's no dedicated audio processor to rescue us the way the graphics card accelerates video processing.)

Unlike with a MIDI synthesizer, in MSP you have the flexibility to design something that is too complicated for your computer to calculate in real time. The result can be audio distortion, a very unresponsive computer, or in extreme cases, crashes.

Because of the variation in processor performance between computers, and because of the great variety of possible signal network configurations, it's difficult to say precisely what complexity of audio processing MSP can or cannot handle. Here are a few general principles:

- The faster your computer's CPU, the better will be the performance of MSP.
- A fast hard drive and a fast connection will improve input/output of audio files.
- Turning off background processes (such as internet chat) will improve performance.
- Reducing the audio sampling rate will reduce how many numbers MSP has to compute for a given amount of sound, thus improving its performance (although a lower sampling rate will mean degradation of high frequency response). Controlling the audio sampling rate is discussed in the Audio Input and Output chapter.
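The trade-off in the last point can be made concrete with a short Python sketch. The function names are hypothetical; the only fact used beyond the text is the standard sampling result that a digital signal can represent frequencies only up to half its sampling rate.

```python
def samples_to_compute(seconds, sample_rate, channels=1):
    """Number of sample values needed for a given duration of sound."""
    return int(seconds * sample_rate * channels)


def highest_representable_hz(sample_rate):
    """Upper frequency limit at a given sampling rate (half the rate)."""
    return sample_rate / 2


full_rate_work = samples_to_compute(1.0, 44100)   # 44,100 values
half_rate_work = samples_to_compute(1.0, 22050)   # 22,050 values, half the work
full_rate_limit = highest_representable_hz(44100)  # 22,050 Hz
half_rate_limit = highest_representable_hz(22050)  # 11,025 Hz
```

Halving the sampling rate halves the number of values to compute, and it also halves the highest frequency that can be reproduced.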

When designing your MSP instruments, you should bear in mind that some objects require more intensive computation than others. An object that performs only a few simple arithmetic operations (such as sig~, line~, +~, *~, or phasor~) is computationally inexpensive. (However, /~ is much more expensive.) An object that looks up a number in a function table and interpolates between values (such as cycle~) requires only a few calculations, so it's likewise not too expensive. The most expensive objects are those which must perform many calculations per sample: filters (reson~, biquad~), spectral analyzers (fft~, ifft~), and objects such as play~, groove~, comb~, and tapout~ when one of their parameters is controlled by a continuous signal. Efficiency issues are discussed further in the MSP Tutorial.

Note: To see how much of the processor's time your patch is taking, look at the CPU Utilization value in the DSP CPU Monitor. You will find it in the Extras menu.

Advantages of MSP

Your laptop or desktop machine is a general purpose computer, not a specially designed sound processing computer such as a commercial sampler or synthesizer, so as a rule you can't expect it to perform quite to that level. However, for relatively simple instrument designs that meet specific synthesis or processing needs you may have, or for experimenting with new audio processing ideas, it is a very convenient instrument-building environment. Here are some of the things MSP can let you do:

- Design an instrument to fit your needs. Even if you have a lot of audio equipment, it probably cannot do every imaginable thing you need to do. When you need to accomplish a specific task not readily available in your studio, you can design it yourself.
- Build an instrument and hear the results in real time. With non-realtime sound synthesis programs you define an instrument that you think will sound the way you want, then compile it and test the results, make some adjustments, recompile it, etc. With MSP you can hear each change that you make to the instrument as you build it, making the process more interactive.
- Establish the relationship between gestural control and audio result. With many commercial instruments you can't change parameters in real time, or you can do so only by programming in a complex set of MIDI controls. With Max you can easily connect MIDI data to the exact parameter you want to change in your MSP signal network, and you know precisely what aspect of the sound you are controlling with MIDI.
- Integrate audio processing into your composition or performance programs. If your musical work consists of devising automated composition programs or computer-assisted performances in Max, now you can incorporate audio processing into those programs. Need to do a hands-free crossfade between your voice and a pre-recorded sample at a specific point in a performance? You can write a Max patch with MSP objects that does it for you, triggered by a single MIDI message.

Some of these ideas are demonstrated in the MSP tutorials.