Tutorial 49: Colorspaces

In order to generate and display matrices of video data in Jitter, we make some assumptions about how the digital image is represented. For many typical uses (as covered by earlier tutorials) we encode color images into 4-plane matrices of type . These planes represent the alpha, red, green, and blue color channels of each cell in that matrix. This type of color representation (ARGB) is useful as it closely matches both the way we see color (through color receptors in our eyes tuned to red, green, and blue) and the way computer monitors, projectors, and televisions display it. Tutorial 5: ARGB Color examines the rationale behind this system and explains how you typically manipulate this data.

It's important to note, however, that ARGB is not the only way to represent color information in a digital form. This tutorial examines one of several different ways of representing color image information in Jitter matrices, along with a discussion of several alternatives available to us for different uses. Along the way we'll look at a simple, efficient way to texture video onto an OpenGL plane to take advantage of hardware accelerated post-processing of the video image.

At first glance, this patch looks very similar to the one we used in Tutorial 12: Color Lookup Tables. It reads a file into a jit.movie object, sending the matrices out into a jit.charmap object, where we can alter the color mapping of the different planes in an arbitrary manner by creating a matrix () that serves as a color lookup table. The processed matrix is then displayed.

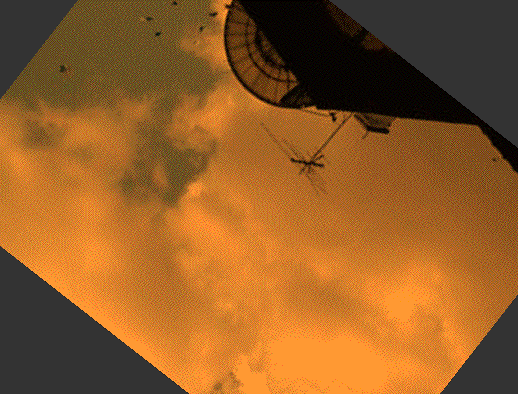

Though it isn't immediately obvious (yet), the image matrix in our patch is being generated and manipulated according to a different system of color than the ARGB mapping we're accustomed to. The jit.movie object in this patch is transmitting matrices using a called . This means that our image processing chain is working with data in a different colorspace than we usually work in, called YUV 4:2:2. In addition to transmitting color according to a different coordinate system than we usually use (YUV instead of ARGB), this mode of transmission uses a technique called chroma subsampling to reduce (by half!) the amount of data transmitted for an image of a given size.

Color Lookup with a Twist

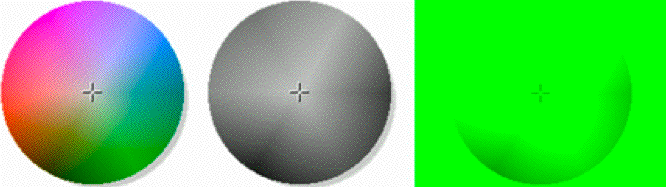

The YUV colorspace is a luminance/chrominance color system: it separates the luminosity of a given color from the chromatic information used to determine its hue. It stores the luminosity of a given pixel in a luminance channel (Y). The U channel is then created by subtracting the Y from the amount of blue in the color image. The V channel is created by subtracting the Y from the amount of red in the color image. The U and V channels (representing chrominance) are then scaled by different factors. As a result, low values of U and V will expose shades of green, while a constant medium value of both will give a grayscale image. One can convert color values from RGB to YUV using the following formulas:

Y = 0.299R + 0.587G + 0.114B

U = 0.492(B - Y)

V = 0.877(R - Y)
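The three formulas above translate directly into code. The following is a minimal sketch in Python (not Jitter code); the function name `rgb_to_yuv` and the choice of a 0.0-1.0 input range are assumptions made for illustration.

```python
def rgb_to_yuv(r, g, b):
    """Convert RGB components (here assumed to lie in 0.0-1.0) to Y, U, V.

    Uses the coefficients given in the tutorial text. U and V are signed.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luminance
    u = 0.492 * (b - y)                    # scaled blue-minus-luminance
    v = 0.877 * (r - y)                    # scaled red-minus-luminance
    return y, u, v


if __name__ == "__main__":
    samples = {
        "white": (1.0, 1.0, 1.0),
        "red": (1.0, 0.0, 0.0),
        "green": (0.0, 1.0, 0.0),
        "blue": (0.0, 0.0, 1.0),
    }
    for name, rgb in samples.items():
        y, u, v = rgb_to_yuv(*rgb)
        print(f"{name:>5}: Y={y:.3f} U={u:+.3f} V={v:+.3f}")
```

Running it shows that neutral colors such as white have U and V at (or extremely close to) zero, while pure green yields negative values for both U and V, which matches the observation that low U and V values expose shades of green.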

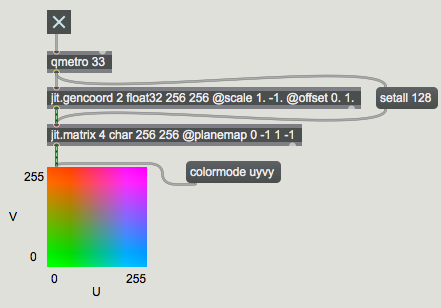

Note that the U and V components in this color space are usually signed (i.e. they can be negative numbers if the luminosity exceeds the blue or red amount, as it does with hues such as orange, green, and cyan). Jitter matrices store unsigned data, so the U and V values are represented in the range of 0-255, with 128 as the center point of the chromatic space.
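The text does not state the exact scaling Jitter uses to fit signed chroma into the 0-255 range, so the following Python sketch only illustrates the general idea of an offset encoding with 128 as the zero point. The helper names `to_unsigned` and `to_signed`, the linear scaling, and the 0.0-1.0 RGB range used to derive `U_MAX` and `V_MAX` are all assumptions.

```python
# Largest magnitudes U and V can reach for RGB in 0.0-1.0, derived from
# the formulas above (pure blue maximizes U, pure red maximizes V).
U_MAX = 0.492 * (1.0 - 0.114)
V_MAX = 0.877 * (1.0 - 0.299)


def to_unsigned(value, max_abs):
    """Map a signed chroma value in [-max_abs, max_abs] to 0-255.

    Zero maps to 128, the center of the chromatic space. The linear
    scaling is a hypothetical choice, not Jitter's documented behavior.
    """
    scaled = 128 + round(value / max_abs * 127)
    return max(0, min(255, scaled))  # clip anything out of range


def to_signed(byte, max_abs):
    """Approximate inverse of to_unsigned."""
    return (byte - 128) / 127 * max_abs


if __name__ == "__main__":
    for u in (-U_MAX, 0.0, U_MAX):
        print(f"U={u:+.3f} -> stored as {to_unsigned(u, U_MAX)}")
```

With this kind of encoding, stored values below 128 stand for negative chroma and values above 128 stand for positive chroma.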

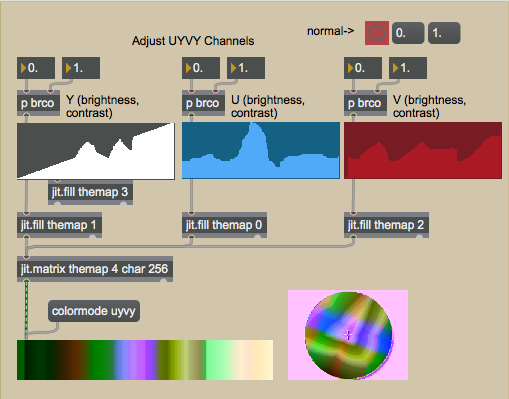

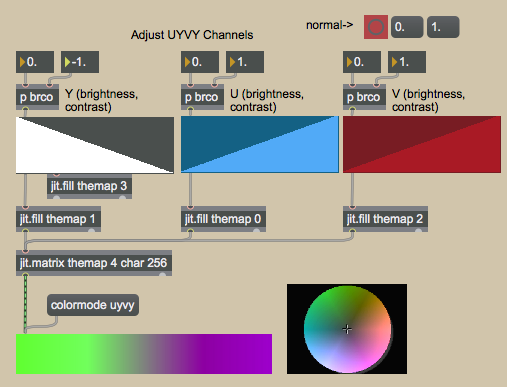

The specific implementation of the YUV colorspace used in our Tutorial patch is called YUV 4:2:2. Jitter objects that need to interpret matrix data as video (e.g. jit.movie, jit.pwindow, etc.) can generate and display this colorspace when their attribute is set to . This uses something called chroma subsampling to store two adjacent color pixels into a single cell (referred to as a “macro-pixel”). Because our eyes are more attuned to fine gradations in luminosity than in color, this is an efficient way to perform data reduction on an image, in effect cutting in half the amount of information needed to convey the color with reasonable accuracy. In this system, each cell in a Jitter matrix contains four planes that represent two horizontally adjacent pixels: plane contains the U value for both pixels; plane contains the Y value for the first pixel; plane contains the V value for both pixels; plane contains the Y value for the second pixel. The ordering of the planes () means that we can alter the luminosity of the image by adjusting planes and (for alternating pixel columns), but we can change the chrominance of pixels only in pairs (by adjusting planes and ).
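To make the macro-pixel layout concrete, here is a small Python sketch that unpacks cells into individual pixels. It assumes the four planes are ordered U, Y (first pixel), V, Y (second pixel), in the order the paragraph above lists them, and that they are numbered from zero; the function names are hypothetical.

```python
def unpack_macropixel(cell):
    """Split one 4-plane cell (U, Y1, V, Y2) into two (Y, U, V) pixels.

    Both pixels share the same U and V values; only Y differs.
    """
    u, y1, v, y2 = cell
    return (y1, u, v), (y2, u, v)


def unpack_row(cells):
    """Expand a row of macro-pixel cells into twice as many pixels."""
    pixels = []
    for cell in cells:
        pixels.extend(unpack_macropixel(cell))
    return pixels


if __name__ == "__main__":
    row = [(128, 16, 128, 235), (90, 81, 240, 81)]
    pixels = unpack_row(row)
    print(f"{len(row)} cells -> {len(pixels)} pixels")
    for pixel in pixels:
        print(pixel)
```

Each cell carries four values for two pixels, compared with eight values for two 4-plane ARGB cells, which is where the halving of the data comes from.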

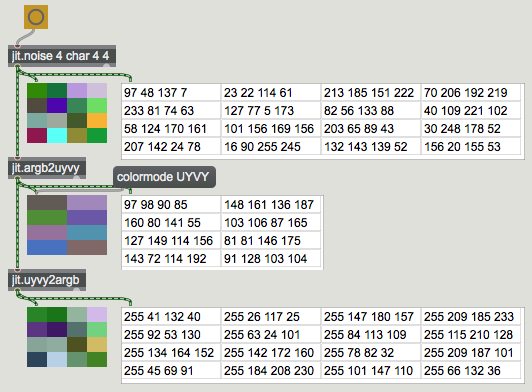

The following illustration shows how the conversion from ARGB to UYVY is handled in Jitter. Our jit.movie object performs this translation for us when necessary (see the box below), but the jit.argb2uyvy and jit.uyvy2argb objects will convert any matrix between colorspaces. Note that the alpha channel is lost in the conversion and that chromatic information is averaged across pairs of horizontal cells in the ARGB original, creating a slight loss in color information.

(Note that when converting back to ARGB, a new, empty alpha channel is also created.)
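The forward conversion described above (alpha dropped, chroma averaged over each horizontal pair) can be sketched in Python as follows. This is not the implementation of jit.argb2uyvy: it works on floating-point values in 0.0-1.0 with signed U and V, leaves out whatever byte scaling Jitter applies, and uses hypothetical function names.

```python
def rgb_to_yuv(r, g, b):
    """RGB (0.0-1.0) to Y, U, V using the tutorial's coefficients."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, 0.492 * (b - y), 0.877 * (r - y)


def argb_pair_to_uyvy(left, right):
    """Combine two horizontally adjacent (a, r, g, b) cells into (u, y1, v, y2).

    Alpha is discarded, and U and V are averaged across the pair.
    """
    _, r1, g1, b1 = left
    _, r2, g2, b2 = right
    y1, u1, v1 = rgb_to_yuv(r1, g1, b1)
    y2, u2, v2 = rgb_to_yuv(r2, g2, b2)
    return ((u1 + u2) / 2, y1, (v1 + v2) / 2, y2)


def argb_row_to_uyvy(row):
    """Convert a row with an even number of ARGB cells to half as many cells."""
    if len(row) % 2 != 0:
        raise ValueError("row width must be even to form macro-pixels")
    return [argb_pair_to_uyvy(row[i], row[i + 1]) for i in range(0, len(row), 2)]


if __name__ == "__main__":
    red = (1.0, 1.0, 0.0, 0.0)
    blue = (1.0, 0.0, 0.0, 1.0)
    print(argb_row_to_uyvy([red, blue, blue, blue]))
```

In the first output cell the red and blue pixels keep their own Y values but share one averaged U and one averaged V, which is the slight loss of color information mentioned above.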

With this in mind, we can understand the processing going on in our patch. The jit.movie sends matrices in the 4-plane format to the jit.charmap, which processes the data and sends it onwards. The jit.fpsgui tells us that the of the matrices being sent to it are 160x240, which makes sense now that we understand the macro-pixel data reduction that accompanies the switch in . In addition, we can now see why the matrix has one jit.fill object feeding both planes and ; these correspond to the two Y values in the macro-pixel, which we want to share the same lookup table.
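The idea of a per-plane lookup with one table shared by both luminance planes can be sketched in Python as below. This only imitates the kind of mapping jit.charmap performs; the plane order (U, Y, V, Y), the function names, and the example brightening curve are assumptions for illustration.

```python
def cells_per_row(pixel_width):
    """Number of macro-pixel cells needed for a row of pixel_width pixels."""
    return pixel_width // 2


def apply_lookup(cell, tables):
    """Replace each plane's value with the entry from that plane's 256-point table."""
    return tuple(tables[plane][value] for plane, value in enumerate(cell))


identity = list(range(256))
brighten = [min(255, v + 40) for v in range(256)]  # hypothetical luminance curve

# Assumed plane order U, Y1, V, Y2: both Y planes use the same table so
# that alternating pixel columns are treated identically.
tables = [identity, brighten, identity, brighten]

if __name__ == "__main__":
    print(cells_per_row(320))
    print(apply_lookup((128, 100, 128, 250), tables))
```

If the two Y planes used different tables, odd and even pixel columns would be processed differently and the image would show vertical striping.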

Color tinting and saturation

Because the luminosity is separate from the chrominance in our new colorspace, it is a simple matter to invert the brightness of pixels without affecting their hue. This would be a more involved procedure if working in ARGB.
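As a rough Python illustration, inverting brightness only requires an inverted lookup table on the two luminance planes while the chroma planes pass through untouched. The plane order (U, Y, V, Y) and the names used here are assumptions.

```python
identity = list(range(256))
inverted = [255 - v for v in range(256)]  # inverted 256-point ramp


def invert_luma(cell):
    """Invert both Y values of a (U, Y1, V, Y2) cell, leaving U and V alone."""
    u, y1, v, y2 = cell
    return (identity[u], inverted[y1], identity[v], inverted[y2])


if __name__ == "__main__":
    print(invert_luma((100, 0, 200, 255)))
```

Doing the same in ARGB would mean converting each pixel to a luminance/chrominance form, inverting, and converting back, since brightness is spread across the R, G, and B planes.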

By inverting the U and V channels' color lookup, we perform a 180-degree hue rotation on the image. Setting both of these channels to a constant value desaturates the image so that it appears at a constant chroma, or hue, according to the Cartesian space shown earlier in this tutorial. Values of 128 (the center point of the chromatic space) will desaturate the image to greyscale; low values, toward 0, will make the entire image appear green.
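Both chroma operations can be sketched in a few lines of Python. Inverting U and V reflects the chroma point through the center of the U/V plane, which is the same as rotating it by 180 degrees. The plane order (U, Y, V, Y), the function names, and the use of 128 as the neutral value (taken from the earlier statement that 128 is the center of the chromatic space) are assumptions of this sketch.

```python
def invert_chroma(cell):
    """Invert U and V of a (U, Y1, V, Y2) cell, leaving luminance alone.

    255 - x reflects around 127.5 rather than exactly 128, so a neutral
    value of 128 becomes 127, a one-step error.
    """
    u, y1, v, y2 = cell
    return (255 - u, y1, 255 - v, y2)


def constant_chroma(cell, value=128):
    """Force U and V to one constant value; 128 is assumed to mean neutral."""
    _, y1, _, y2 = cell
    return (value, y1, value, y2)


if __name__ == "__main__":
    cell = (0, 10, 255, 20)
    print(invert_chroma(cell))
    print(constant_chroma(cell))
    print(constant_chroma(cell, 0))
```

The luminance values are identical in all three results, so the image keeps its detail and only its color changes.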

Videoplane

The jit.gl.videoplane object textures the Jitter matrix sent into its inlet onto a plane in an OpenGL drawing context. Our drawing context () is being driven by the jit.gl.render object at the top of the patch, and is viewable through the jit.window object's window. If you need to review the basics of OpenGL rendering in Jitter, a look at Tutorial 30: Drawing 3D text will fill you in on the basics of creating a rendering system. One thing of note is that the attribute of jit.gl.render, when set to , renders our scene in an orthographic projection (i.e. there is no sense of depth). Also note that the jit.gl.videoplane object, not the jit.window, needs to be told to interpret texture matrices as through its attribute. In our patch we really aren't using OpenGL for 3D modeling; but we are taking advantage of some features of hardware accelerated texture mapping.

Notice that the jit.pwindow object shows a massively downsampled and pixelated image (It's actually processed as an 8x16 matrix since we're still in mode). The jit.window object, however, shows an image where the pixels are smoothly interpolated into one another (the effect is similar to upsampling in a jit.matrix object with the attribute set to ).

This interpolation is occurring on the hardware Graphics Processing Unit (GPU), and is one of the many advantages to using OpenGL to display video, as it causes no performance penalty on the main CPU of our computer.
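The tutorial does not say which filtering method the GPU applies. Bilinear interpolation is a common choice for texture filtering, so the Python sketch below assumes it purely to illustrate the arithmetic involved in smoothing a small image. The function names are hypothetical, and on real hardware this work is done by the GPU rather than by code like this.

```python
def bilinear_sample(image, x, y):
    """Sample a 2D list of numbers at fractional coordinates (x, y).

    Blends the four nearest cells, weighted by distance; coordinates
    past the last row or column are clamped to the edge.
    """
    height, width = len(image), len(image[0])
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def upsample(image, factor):
    """Enlarge an image by an integer factor using bilinear sampling."""
    height, width = len(image), len(image[0])
    return [
        [bilinear_sample(image, col / factor, row / factor)
         for col in range(width * factor)]
        for row in range(height * factor)
    ]


if __name__ == "__main__":
    tiny = [[0, 100], [100, 200]]
    for row in upsample(tiny, 2):
        print(row)
```

Each output value is a weighted blend of neighboring cells, which is why the enlarged image shows smooth gradients instead of blocks.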

When you send a jit.window object into mode, the jit.gl.videoplane upsamples the texture even further, giving you the smoothest possible interpolation for your display.

Videoplane Post-Processing

We can see that the and attributes (as well as , , etc.) of most OpenGL objects also apply to jit.gl.videoplane. As a result, jit.gl.videoplane is an incredibly useful object for video processing, as it allows us to apply processing to the image directly on the GPU.

Summary

The jit.movie object can output matrices in a number of colorspaces beyond ARGB. The YUV 4:2:2 colorspace can be used by setting the attribute of the objects that support it (jit.movie, jit.pwindow and jit.window) to . The has the advantage of using a macro-pixel chroma subsampling to cut the data rate in half, allowing for matrices to be processed faster in the Jitter matrix processing chain. Since the data output in this colorspace is still 4 planes of information, standard objects such as jit.charmap can be used to manipulate the matrix, albeit with different results.

The jit.gl.videoplane object accepts matrices (including matrices) as textures that are then mapped onto a plane in the OpenGL drawing context named by the object. GPU accelerated processing of the image can therefore be done directly on the plane, including color tinting, blending, spatial transformation, etc. In Tutorial 41: Shaders we saw ways to apply entire processing algorithms to objects in the drawing context, further expanding the possibilities of using the GPU for processing in Jitter.

See Also

| Name | Description |
|---|---|
| Working with Video in Jitter | Working with Video in Jitter |
| GL Texture Output | GL Texture Output |
| jit.argb2uyvy | Convert ARGB to UYVY |
| jit.charmap | Map 256-point input to output |
| jit.fill | Fill a matrix with a list |
| jit.gencoord | Evaluate a procedural basis function graph |
| jit.gl.render | Render Jitter OpenGL objects |
| jit.gl.videoplane | Display video in OpenGL |
| jit.matrix | The Jitter Matrix! |
| jit.noise | Generate white noise |
| jit.pwindow | Display Jitter data and images |
| jit.movie | Play a movie |
| jit.uyvy2argb | Converts UYVY to ARGB |
| multislider | Display data as sliders or a scrolling display |